This blog post covers the key differences between RAG and LLM models to help you choose the right tool for your specific needs.

What is a RAG Model?

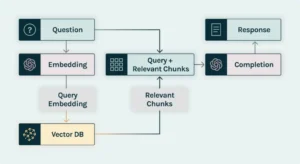

A RAG model, short for Retrieval-Augmented Generation model, is essentially a fact-checking and knowledge-boosting companion for large language models (LLMs).

It helps an LLM produce accurate and reliable output by fetching information from trustworthy sources beyond the model’s initial training data and supplying it when the text is generated.

What is an LLM Model?

An LLM model, which stands for Large Language Model, is a type of artificial intelligence (AI) model trained on a massive dataset of text and code.

Key Differences Between RAG Model and LLM Model

RAG models (Retrieval-Augmented Generation) and LLMs (Large Language Models) are both powerful tools in the field of Natural Language Processing (NLP). However, they differ in function and approach. Here’s a breakdown of 10 key differences between RAG models and LLMs:

1. RAG Model and LLM Model Core Function:

LLMs: Think of LLMs as the creative minds of text generation. They’re trained on massive datasets of text and code, learning the statistical patterns of language. This allows them to generate many kinds of text, such as poems, scripts, or even code.

RAG models, on the other hand, function differently. They act like information detectives, retrieving relevant details from external knowledge bases. The retrieved information is then passed to an LLM as extra context, which improves the accuracy and coherence of the text it generates. Think of it as a collaboration: the LLM provides the creative spark, while RAG provides factual grounding.
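To make this collaboration concrete, here is a minimal Python sketch of the retrieve-then-generate flow. It is an illustration under simplifying assumptions, not a production design: the `KNOWLEDGE_BASE` list, the word-overlap scoring in `retrieve`, and the `llm` callable are all hypothetical stand-ins. Real systems typically use embedding-based search and an actual model API.

```python
import re

# Hypothetical in-memory knowledge base; a real system would use a document store.
KNOWLEDGE_BASE = [
    "RAG stands for Retrieval-Augmented Generation.",
    "An LLM is trained on a large dataset of text and code.",
    "Retrieved passages are added to the prompt before generation.",
]


def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by how many word tokens they share with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(query, llm, documents=KNOWLEDGE_BASE):
    """Retrieve first, then let the LLM generate from the augmented prompt.

    `llm` is an assumed callable that maps a prompt string to a response string.
    """
    passages = retrieve(query, documents)
    return llm(build_prompt(query, passages))
```

Any function that takes a prompt string and returns a response string can be passed as `llm`, so the sketch can be tried with a simple stub before wiring in a real model.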

2. RAG Model and LLM Model Information Source:

- LLMs: LLMs rely solely on the information they learned during training. While this data can be vast, it stays static unless the model is retrained.

- RAG Models: RAG models boast an additional advantage: access to external knowledge bases. This allows them to stay up to date with the latest information and incorporate it into their text generation process.

3. RAG Model and LLM Model Knowledge Updates:

- LLMs: Keeping an LLM’s knowledge current requires retraining on a new dataset, a process that can be time-consuming and resource-intensive.

- RAG Models: Updating a RAG model is significantly simpler. New information can be seamlessly integrated into the external knowledge base, ensuring the model’s knowledge stays fresh.
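The difference in update effort can be sketched in a few lines of Python. This is a toy example with assumed names (`KnowledgeBase`, `add`, `search`) and a naive keyword match standing in for a real search index. The point is only that a new fact becomes retrievable as soon as it is added, with no model retraining involved.

```python
import re


class KnowledgeBase:
    """Hypothetical external knowledge base backed by a plain list."""

    def __init__(self):
        self.documents = []

    def add(self, text):
        """Adding a document is the whole 'update' step; no model is retrained."""
        self.documents.append(text)

    def search(self, query):
        """Return every document that shares at least one word with the query."""
        query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
        return [
            doc
            for doc in self.documents
            if query_words & set(re.findall(r"[a-z0-9]+", doc.lower()))
        ]


kb = KnowledgeBase()
kb.add("The office opens at 9am.")

# Before the update, nothing relevant can be retrieved.
before = kb.search("product launch date")

# After the update, the new fact is available to the very next query.
kb.add("The product launch moved to June.")
after = kb.search("product launch date")
```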

4. RAG Model and LLM Model Area of Focus:

- LLMs: LLMs excel at creative endeavors. They can generate different writing styles, translate languages, and produce various creative text formats, all based on the patterns they’ve learned.

- RAG Models: RAG models prioritize factual accuracy. They retrieve relevant information to enhance the coherence and reliability of the text generated by an LLM.

5. RAG Model and LLM Model Explainability:

- LLMs: The inner workings of LLMs can be complex, making it challenging to pinpoint the exact logic behind their text generation.

- RAG Models: RAG models offer a degree of explainability. By highlighting the retrieved information sources used for generation, they provide insights into the reasoning behind the output.
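One way to surface that explainability is to return the retrieved sources alongside the generated text. The Python sketch below assumes a hypothetical `Passage` record and an `llm` callable that maps a prompt string to a response string; neither comes from a specific library.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    """Hypothetical retrieved passage with a label for where it came from."""
    source: str
    text: str


def answer_with_sources(query, passages, llm):
    """Generate an answer and report which sources were given to the model."""
    # Number each passage so the model (and the reader) can refer to it.
    context = "\n".join(
        f"[{i}] {p.text}" for i, p in enumerate(passages, start=1)
    )
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return {
        "answer": llm(prompt),
        "sources": [p.source for p in passages],
    }
```

The list of sources shows what the model was given, not what it actually relied on, so this offers a degree of explainability rather than a full account of the model’s reasoning.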

6. RAG Model and LLM Model Data Requirements:

- LLMs: Training an LLM requires a massive dataset of text and code, which can be a significant hurdle depending on the project’s scope.

- RAG Models: RAG models have two requirements: an underlying LLM and a well-curated external knowledge base.

7. RAG Model and LLM Model Computational Cost:

- LLMs: Training and running LLMs can be computationally expensive, especially for larger and more complex models.

- RAG Models: Retrieving information from the knowledge base adds an extra computational step to every request, potentially increasing the per-query cost compared to a standalone LLM.

8. RAG Model and LLM Model Applications:

- LLMs: LLMs shine in creative content generation tasks, from writing blog posts to translating languages and composing poems or musical pieces.

- RAG Models: RAG models are ideal for tasks requiring factual accuracy and knowledge integration. They excel in question answering, summarizing factual topics, and generating reports.

9. RAG Model and LLM Model Development Stage:

- LLMs: LLM technology is a more established field, with continuous advancements in model size, capabilities, and training techniques.

- RAG Models: RAG models are a relatively new approach in NLP, with ongoing research and development to unlock their full potential.

10. A Team Effort of RAG Model and LLM Model

- LLM & RAG: While seemingly different, RAG and LLM models are not competitors. They can be effectively combined, with RAG models enhancing the factual accuracy of the text that LLMs generate.

Conclusion

LLMs and RAG models are a powerful duo. LLMs craft the text, while RAG models help keep it accurate by acting as a built-in fact-checker. This collaboration elevates AI text generation to new heights.
