In the ever-evolving world of technology, Google has long been at the forefront, pushing the boundaries of what’s possible. One of its latest projects, Project Astra, aims to change how we interact with AI assistants.

What is Google Project Astra?

Project Astra is Google’s ambitious effort to build a multimodal AI agent: an assistant that can understand several kinds of input at once. It goes beyond current AI assistants by aiming for a more integrated, context-aware experience.

Project Astra’s Multimodal Capabilities

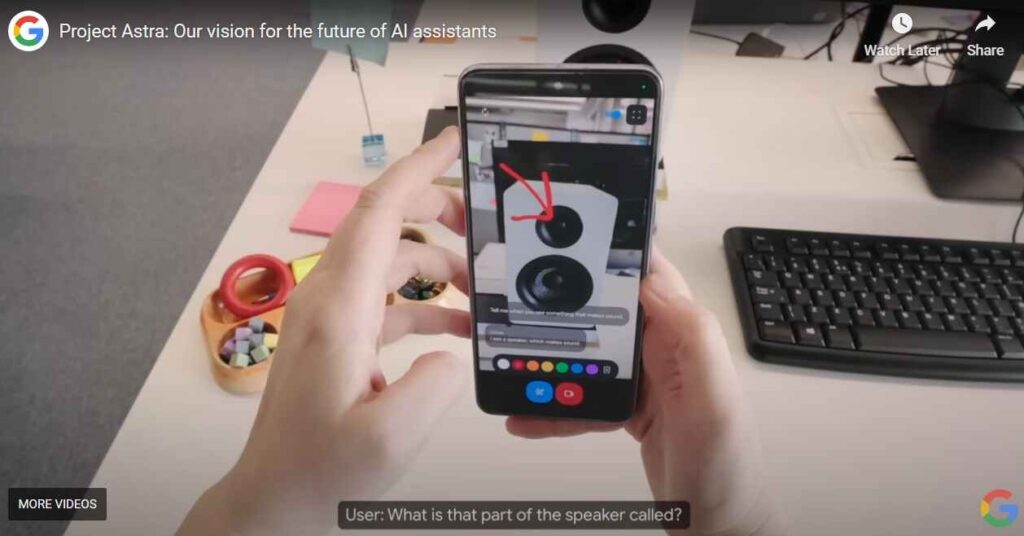

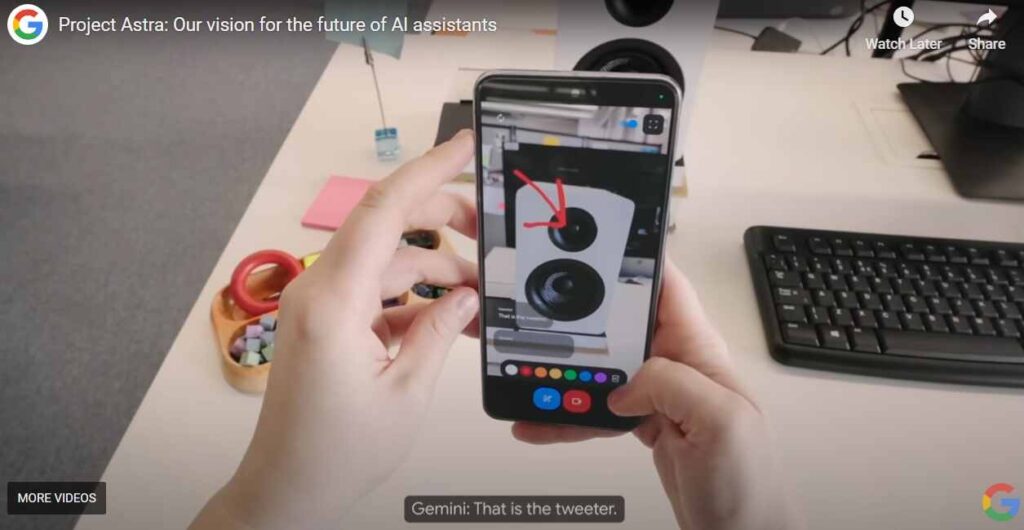

The standout feature of Project Astra is its multimodal capability. Traditional AI assistants rely mainly on voice or text input, whereas Project Astra can process text, video, images, and speech at the same time. It’s like having an assistant that not only hears you but also sees the world around you through your smartphone camera.

Contextual Responses

Because it connects different types of input, Project Astra can give contextual responses. Its answers are grounded in what it currently sees and hears, not just in the words of your query. If you point your camera at an object and ask “What is this?”, the camera feed supplies the context that the question alone lacks. The result is responses that are more relevant and personalized, whether you’re asking a question or looking for help with a task.

Real-Time Assistance

Project Astra is built to respond in real time, answering your questions as the conversation unfolds rather than after a noticeable delay. In that sense, it’s like having a more advanced, conversational version of Google Lens at your disposal.

Advanced Seeing and Talking Responsive Agent

In Google’s demonstration, Project Astra identified objects that produce sound and came up with creative alliterations. It also explained code shown on a monitor and even remembered where the user had left a misplaced pair of glasses.

The Future of Google Project Astra

Project Astra is still in the early stages of development, but Google has said it plans to bring some of its capabilities to products such as the Gemini app later this year. If it delivers on that promise, Project Astra could fundamentally change how we interact with AI assistants.

Conclusion

Google’s Project Astra could be a game-changer for AI assistants. With its multimodal capabilities and contextual responses, it offers a more integrated and personalized user experience. While we wait for its launch, one thing seems clear: Project Astra offers a strong preview of where AI assistants are headed.

Frequently Asked Questions