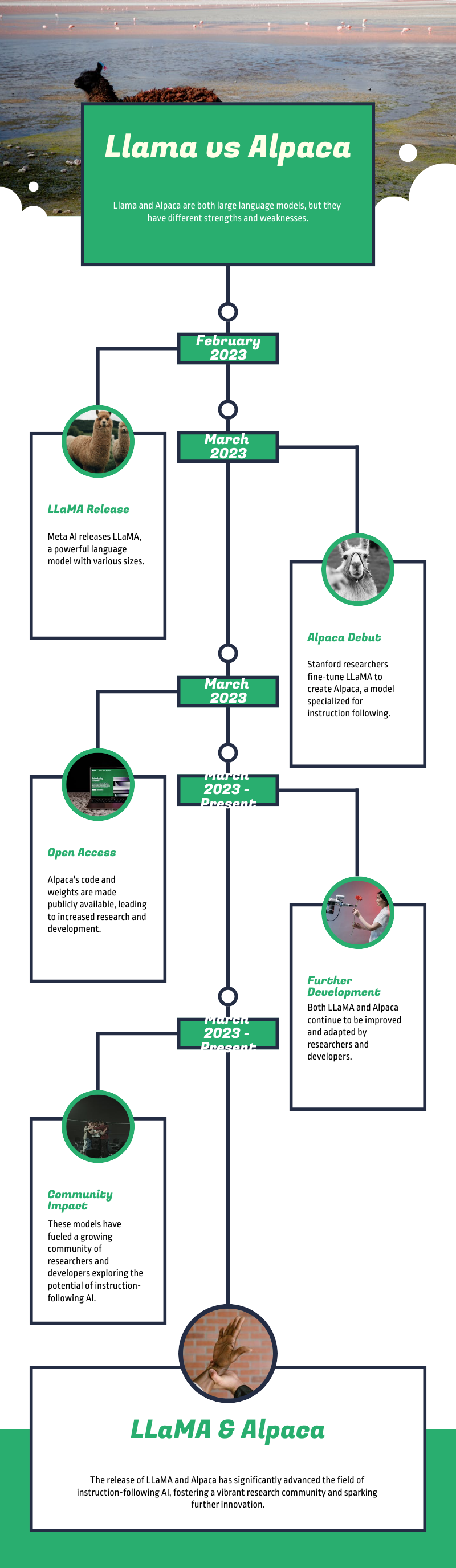

In the vast cosmos of AI, LLaMA and Alpaca AI are two shining stars. This article explains their differences and helps you choose the right one for your journey.


What is the LLaMA Model

LLaMA is a family of large language models developed by Meta. The models are good at understanding and generating text, such as writing emails, translating languages, and even telling stories. LLaMA comes in several sizes to fit different hardware budgets, making advanced AI more accessible for research and development.

What is Alpaca AI

Alpaca AI is a fine-tuned version of the LLaMA 7B model, developed by researchers at Stanford University. It was created to reproduce the instruction-following behavior of OpenAI's text-davinci-003 at a much lower cost. Alpaca is designed specifically for instruction-following and can handle natural language tasks such as text generation and question answering.
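Alpaca's training data is a list of records with `instruction`, `input` and `output` fields, and each record is wrapped in a fixed prompt template before fine-tuning. The sketch below assumes the templates published in the stanford_alpaca repository (exact whitespace in other fine-tunes may differ); `build_alpaca_prompt` is a hypothetical helper for illustration, not a library API.

```python
# Sketch of the Alpaca prompt format, modeled on the templates published in the
# stanford_alpaca repository. build_alpaca_prompt is a hypothetical helper name.

PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)

PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_alpaca_prompt(record: dict) -> str:
    """Format one {"instruction", "input", "output"} record as a prompt.

    The "output" field is the target the model learns to produce after the
    prompt; it is not part of the prompt itself.
    """
    # Records with an empty "input" field use the shorter template.
    if record.get("input"):
        return PROMPT_WITH_INPUT.format(
            instruction=record["instruction"], input=record["input"]
        )
    return PROMPT_NO_INPUT.format(instruction=record["instruction"])


if __name__ == "__main__":
    example = {
        "instruction": "Translate the sentence into French.",
        "input": "Good morning.",
        "output": "Bonjour.",
    }
    prompt = build_alpaca_prompt(example)
    # During fine-tuning, the model learns to continue this prompt with example["output"].
    print(prompt)
```

Keeping one fixed template matters in practice: a model fine-tuned on this format tends to respond best when it is prompted in the same format at inference time.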

Which AI model is best: LLaMA vs Alpaca AI?

- Purpose:
  - LLaMA: Designed for a wide range of natural language processing (NLP) tasks.
  - Alpaca: Fine-tuned for instruction-following tasks.

- Development:
  - LLaMA: Developed by Meta AI.
  - Alpaca: Developed by Stanford's Center for Research on Foundation Models (CRFM).

- Model Size:
  - LLaMA: The original release came in 7B, 13B, 33B and 65B parameters; later generations extend the family up to 405B (Llama 3.1).
  - Alpaca: Based on the LLaMA 7B model.

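- Training Data:
  - LLaMA: Pretrained on large corpora of publicly available text (the original models used 1 to 1.4 trillion tokens).
  - Alpaca: Fine-tuned from LLaMA 7B on 52,000 instruction-following demonstrations generated with OpenAI's text-davinci-003.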

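- Performance:
  - LLaMA: Strong results on standard NLP benchmarks; Meta reported that LLaMA-13B outperformed GPT-3 (175B) on most of them.
  - Alpaca: Aims to replicate the instruction-following behavior of OpenAI's text-davinci-003; Stanford's preliminary human evaluation found the two performed similarly.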

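- Cost-Effectiveness:
  - LLaMA: Larger models need more memory and compute to run; smaller variants are cheaper.
  - Alpaca: Cheap to build; Stanford reported spending under $500 to generate the training data and under $100 on fine-tuning compute.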
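Much of the cost difference comes down to model size. As a back-of-envelope sketch (an estimate, not a benchmark), the memory needed just to hold a model's weights is roughly its parameter count times the bytes used per parameter. The helper `weight_memory_gb` and the `BYTES_PER_PARAM` table are illustrative names assumed here, not part of any library.

```python
# Back-of-envelope estimate of the memory needed just to store model weights.
# Assumption: memory ~= parameter count x bytes per parameter. Activations,
# KV cache and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate weight memory in GB (1 GB = 10**9 bytes).

    Billions of parameters times bytes per parameter gives GB directly,
    because the two factors of 10**9 cancel.
    """
    return params_billion * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # 7B covers both LLaMA 7B and Alpaca 7B, which share the same architecture.
    for size in (7, 65, 405):
        fp16 = weight_memory_gb(size, "fp16")
        int4 = weight_memory_gb(size, "int4")
        print(f"{size}B parameters: ~{fp16:.1f} GB in fp16, ~{int4:.1f} GB in 4-bit")
```

Note that Alpaca's savings are in how cheaply it was built; at inference time a 7B Alpaca model needs about the same memory as LLaMA 7B.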


LLaMA vs Alpaca AI: Comparison Chart

| Feature | LLaMA | Alpaca |
|---|---|---|
| Purpose | General NLP tasks | Instruction-following tasks |
| Developer | Meta AI | Stanford CRFM |
| Model Size | 7B to 65B (original); up to 405B (Llama 3.1) | Based on LLaMA 7B |
| Training Data | Large public text corpora | Fine-tuned on 52,000 instruction demos |
| Performance | Strong on NLP benchmarks | Similar to text-davinci-003 in Stanford's evaluation |
| Cost-Effectiveness | Varies with model size | Cheap to build (under $600 reported) |

Choosing the Right Model: LLaMA vs Alpaca AI

- General NLP tasks: Choose LLaMA.

- Instruction-following tasks: Choose Alpaca.

People Also Ask

Is LLaMA better than GPT?

It depends on the task. LLaMA's weights are openly available, so it can be run and fine-tuned on your own hardware at lower cost, while GPT-4 generally excels at complex tasks and high-level reasoning.

What is LLaMA by Meta?

LLaMA (Large Language Model Meta AI) is a family of large language models developed by Meta AI, designed for various NLP tasks.

Is LLaMA 3 better than GPT-4?

LLaMA 3 competes well on some benchmarks, but GPT-4 generally performs better in complex scenarios.

Is LLaMA free?

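Mostly. Llama 2 and later models can be downloaded and used free of charge for research and most commercial purposes under Meta's community license, which carries some restrictions (for example, services with more than 700 million monthly active users must request a separate license). The original LLaMA was released for non-commercial research use only.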

How big is LLaMA AI?

The original LLaMA came in 7, 13, 33 and 65 billion parameters, and Llama 3.1 extends the family up to 405 billion parameters.